What is Cloud Detection?

Cloud detection is a crucial process in Earth Observation (EO) used to identify and mask clouds in satellite imagery. This is necessary because clouds can obstruct the view of the Earth’s surface, making it difficult to interpret and analyze the data accurately.

Cloud detection algorithms typically use a combination of spectral and spatial information to differentiate between clouds and other features in the imagery. For instance, they may use information from various wavelengths of light to distinguish between clouds and land or water surfaces. They may also use contextual information, such as the size and shape of features in the image, to aid in cloud identification.
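As a rough illustration of the spectral part of this idea, the sketch below flags a pixel as cloud when it is both bright and spectrally flat ("white") across three bands. The band choice (blue, red, near-infrared reflectance), the function names, and both thresholds are assumptions made for this example, not values taken from any operational algorithm.

```python
# Illustrative per-pixel spectral test. Band names and thresholds are
# assumptions for this sketch, not parameters of a real cloud detector.

def is_cloud(blue, red, nir, brightness_min=0.3, whiteness_max=0.2):
    """Return True if a pixel is bright and spectrally flat ("white")."""
    mean = (blue + red + nir) / 3.0
    if mean < brightness_min:
        return False  # too dark to be treated as cloud in this sketch
    # Whiteness: summed deviation of each band from the mean, relative to the mean.
    spread = (abs(blue - mean) + abs(red - mean) + abs(nir - mean)) / mean
    return spread <= whiteness_max


def cloud_mask(image):
    """Apply the per-pixel test to a 2D grid of (blue, red, nir) tuples."""
    return [[is_cloud(*pixel) for pixel in row] for row in image]


image = [
    [(0.60, 0.62, 0.65), (0.05, 0.04, 0.30)],  # cloud-like, vegetation-like
    [(0.08, 0.05, 0.02), (0.55, 0.58, 0.60)],  # water-like, cloud-like
]
mask = cloud_mask(image)
print(mask)
```

A test this simple would also flag other bright, white surfaces such as snow, which is one reason practical algorithms add further spectral tests and the contextual information described above.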

Once clouds are detected, they can be masked or removed from the image so that the underlying land or water surface can be analyzed. This is important for a wide range of applications, including land use and land cover mapping, crop monitoring, and climate studies.

Cloud detection is also used in real-time applications such as weather forecasting and disaster management, where monitoring cloud cover and its changes over time is crucial. In these applications, cloud detection algorithms can track the movement and formation of clouds, providing valuable information for predicting weather patterns and identifying areas that may be impacted by natural disasters.

Cloud Detection Onboard

Performing cloud detection onboard EO satellites offers several benefits over performing cloud detection on the ground:

• Faster response time: Cloud detection onboard the satellite enables near real-time detection and removal of clouds, which is particularly useful for time-critical applications such as weather forecasting and disaster response.

• Reduced data transmission: Transmitting large amounts of satellite imagery data to the ground can be expensive and time-consuming. By performing cloud detection onboard, only the useful data (i.e. data without clouds) needs to be transmitted to the ground, reducing data transmission costs.

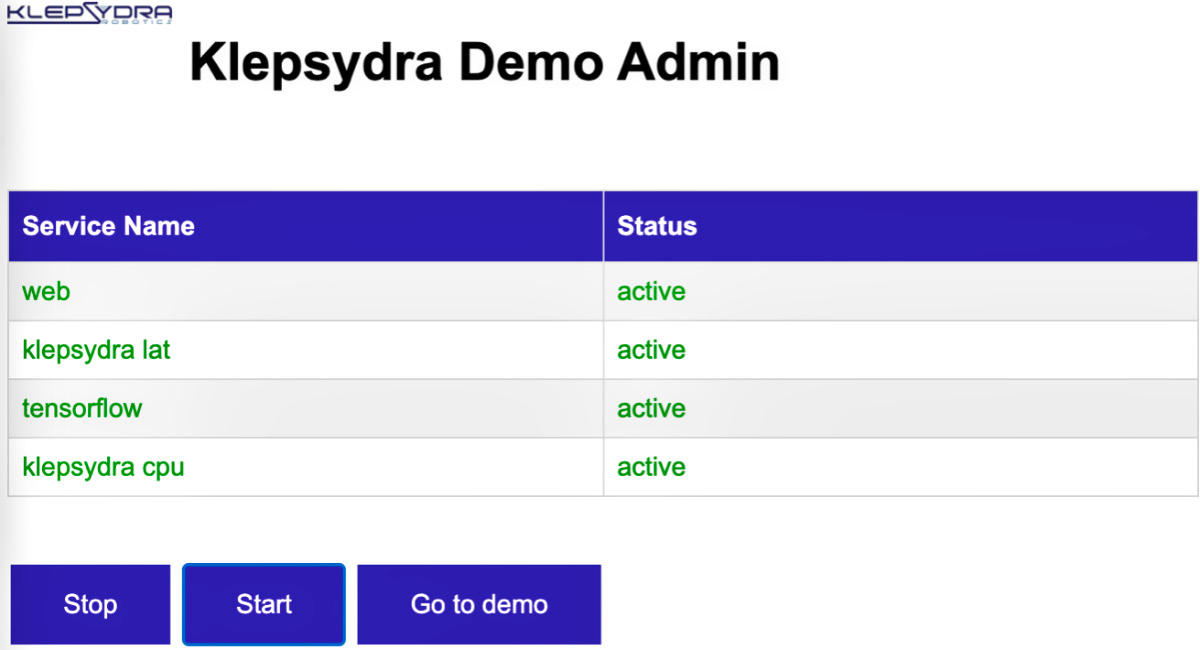

Image 1.

• Improved data quality: Cloud detection onboard the satellite can result in improved data quality because the detection algorithms can take into account the unique characteristics of the satellite’s sensors and the viewing geometry. This can result in more accurate and reliable cloud detection.

• Increased availability of cloud-free data: By performing cloud detection onboard, the satellite can provide a higher percentage of cloud-free data, which is particularly important for applications such as land use and land cover mapping, crop monitoring, and climate studies.

• Improved efficiency of downstream processing: Cloud detection onboard the satellite can improve the efficiency of downstream processing by reducing the amount of data that needs to be processed on the ground. This can lead to faster and more accurate analysis of the data.
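The data-transmission benefit listed above can be sketched as a simple onboard filter: compute the cloud fraction of each image tile from its mask and downlink only the tiles below a limit. The tile structure, the `max_cloud_fraction` value of 0.5, and the function names are hypothetical and chosen only for illustration.

```python
# Hypothetical onboard downlink filter. The tile layout and the 0.5 cloud
# limit are assumptions for this sketch.

def cloud_fraction(mask):
    """Fraction of pixels flagged as cloud in a 2D boolean mask."""
    total = sum(len(row) for row in mask)
    cloudy = sum(1 for row in mask for flag in row if flag)
    return cloudy / total if total else 0.0


def select_for_downlink(tiles, max_cloud_fraction=0.5):
    """tiles: list of (tile_id, size_bytes, mask). Returns kept ids and bytes saved."""
    kept = [tile for tile in tiles if cloud_fraction(tile[2]) <= max_cloud_fraction]
    total_bytes = sum(size for _, size, _ in tiles)
    sent_bytes = sum(size for _, size, _ in kept)
    return [tile_id for tile_id, _, _ in kept], total_bytes - sent_bytes


tiles = [
    ("tile-a", 1000, [[False, False], [False, True]]),   # 25% cloud
    ("tile-b", 1000, [[True, True], [True, False]]),     # 75% cloud
    ("tile-c", 1000, [[False, False], [False, False]]),  # clear
]
kept_ids, bytes_saved = select_for_downlink(tiles)
print(kept_ids, bytes_saved)
```

In this toy run, one of three equally sized tiles is dropped, so a third of the downlink volume is saved. The actual saving on a mission depends on cloud cover and on how strict the limit is.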

OBPMark-ML

OBPMark (On-Board Processing Benchmarks) is a set of computational performance benchmarks developed specifically for on-board spacecraft data processing applications, such as image and radar processing, data and image compression, signal processing, and machine learning.

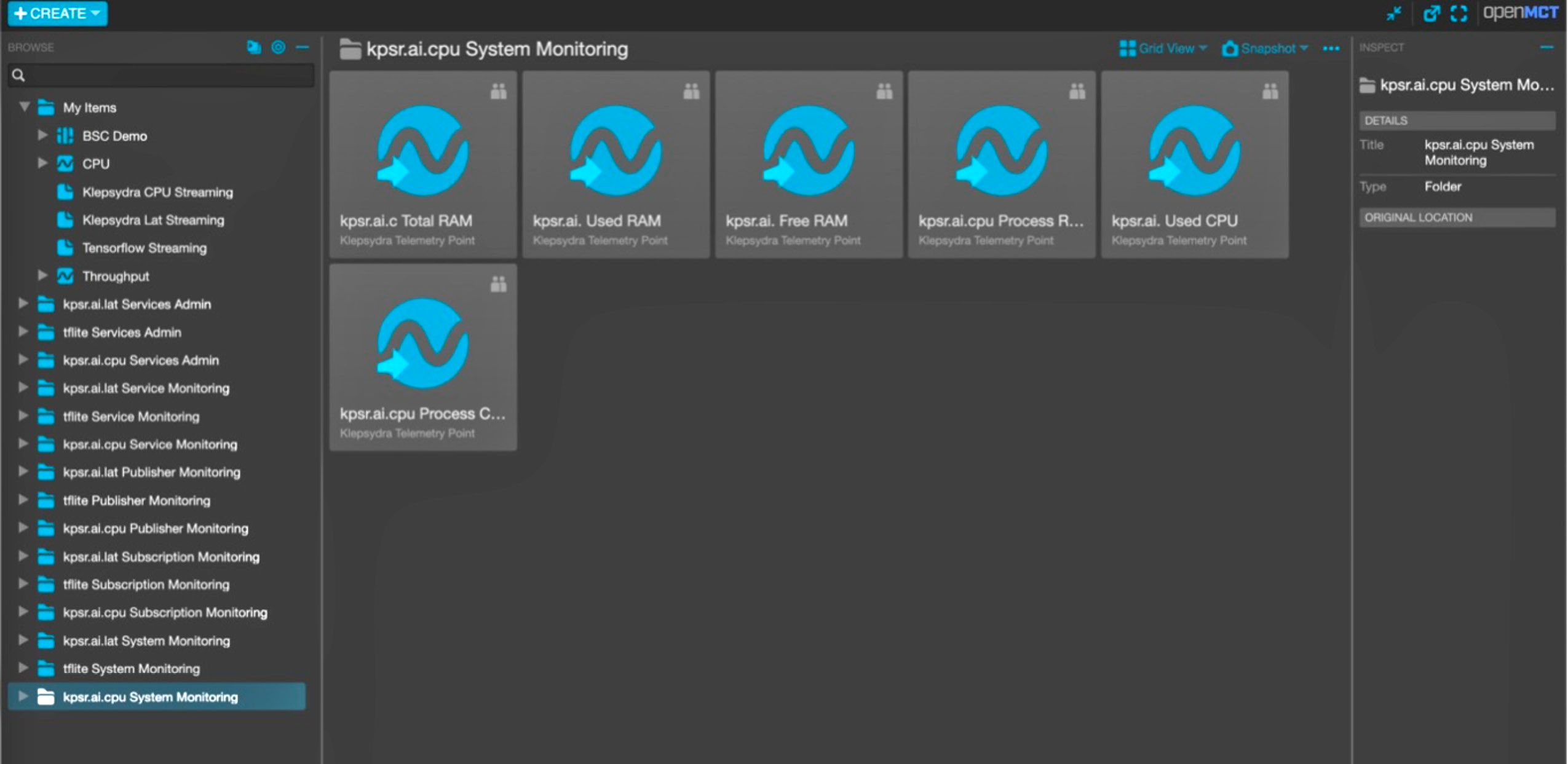

Image 2.

As part of OBPMark, a cloud detection AI algorithm was developed by the Barcelona Supercomputing Center and ESA. This algorithm uses segmentation for cloud detection on an open-source dataset called Cloud95. U-Nets have become a standard approach for segmentation tasks and have also been shown to be effective on cloud screening tasks (Johannes Drönner, Nikolaus Korfhage, Sebastian Egli, Markus Mühling, Boris Thies, Jörg Bendix, Bernd Freisleben and Bernhard Seeger. Fast Cloud Segmentation Using Convolutional Neural Networks. Remote Sens. 2018).

However, U-Nets are constrained in on-board processing by their very large number of parameters and high computational cost. A good trade-off between computational complexity and memory footprint on one side and prediction accuracy on the other had to be found. To achieve this, the number of parameters needed to be scaled down. This was accomplished by reducing the network depth and the number of filters in the convolution layers.
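To give a feel for how strongly depth and filter count drive model size, the sketch below counts the parameters of a generic, textbook-style U-Net (two 3x3 convolutions per level, 2x2 up-convolutions, skip concatenation). This layout, the function names, and the example settings are assumptions for illustration and are not the OBPMark-ML architecture.

```python
# Parameter count of a generic U-Net. The layout is a textbook-style
# assumption and does not describe the OBPMark-ML model.

def conv_params(kernel, c_in, c_out):
    """Weights plus biases of one 2D convolution (or transposed convolution)."""
    return kernel * kernel * c_in * c_out + c_out


def unet_params(depth, base_filters, in_channels=4, out_channels=1):
    """Count parameters for a U-Net with `depth` downsampling steps."""
    total = 0
    c_in = in_channels
    # Encoder and bottleneck: two 3x3 convolutions per level, filters double.
    for level in range(depth + 1):
        c_out = base_filters * 2 ** level
        total += conv_params(3, c_in, c_out) + conv_params(3, c_out, c_out)
        c_in = c_out
    # Decoder: 2x2 up-convolution, skip concatenation, two 3x3 convolutions.
    for level in reversed(range(depth)):
        c_out = base_filters * 2 ** level
        total += conv_params(2, c_in, c_out)       # up-convolution halves channels
        total += conv_params(3, 2 * c_out, c_out)  # input is upsampled + skip features
        total += conv_params(3, c_out, c_out)
        c_in = c_out
    # Final 1x1 convolution maps features to the output mask.
    return total + conv_params(1, c_in, out_channels)


full = unet_params(depth=4, base_filters=64)
reduced = unet_params(depth=3, base_filters=16)
print(f"full: {full:,} parameters, reduced: {reduced:,} parameters")
```

With depth 4 and 64 base filters, this generic layout has roughly 31 million parameters. Cutting the depth and the base filter count shrinks it by well over an order of magnitude, which is the kind of reduction the paragraph above describes.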

Another method involved using techniques from the MobileNetV2 architecture and adapting them to the U-Net architecture (Junfeng Jing, Zhen Wang, Matthias Rätsch and Huanhuan Zhang. Mobile-Unet: An efficient convolutional neural network for fabric defect detection. Textile Research Journal, 2020). Both methods were evaluated for their effectiveness. (See image 3.)
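One of the building blocks of MobileNetV2 is the depthwise separable convolution, which replaces a standard convolution with a per-channel spatial filter followed by a 1x1 channel-mixing convolution. The sketch below compares weight counts for the two forms. The layer sizes are arbitrary examples, and the source does not say which MobileNetV2 techniques were actually adopted.

```python
# Weight counts (biases omitted) for a standard vs. a depthwise separable
# convolution. The layer sizes below are arbitrary examples.

def standard_conv_weights(kernel, c_in, c_out):
    """Every output channel has a k x k filter over every input channel."""
    return kernel * kernel * c_in * c_out


def separable_conv_weights(kernel, c_in, c_out):
    """Depthwise k x k filter per input channel, then a 1x1 pointwise mix."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


std = standard_conv_weights(3, 64, 128)   # 9 * 64 * 128 = 73728
sep = separable_conv_weights(3, 64, 128)  # 576 + 8192 = 8768
print(f"standard: {std}, separable: {sep}, ratio: {std / sep:.1f}x")
```

For this example layer, the separable form needs about 8 times fewer weights, at the cost of a less expressive filter.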

KATESU Project

The current commercial version of Klepsydra AI has successfully passed validation in an ESA activity called KATESU for Teledyne e2v’s LS1046 and Xilinx ZedBoard onboard computers, achieving outstanding performance results. During this activity, two DNN algorithms provided by ESA, CME and OBPMark-ML, were tested.

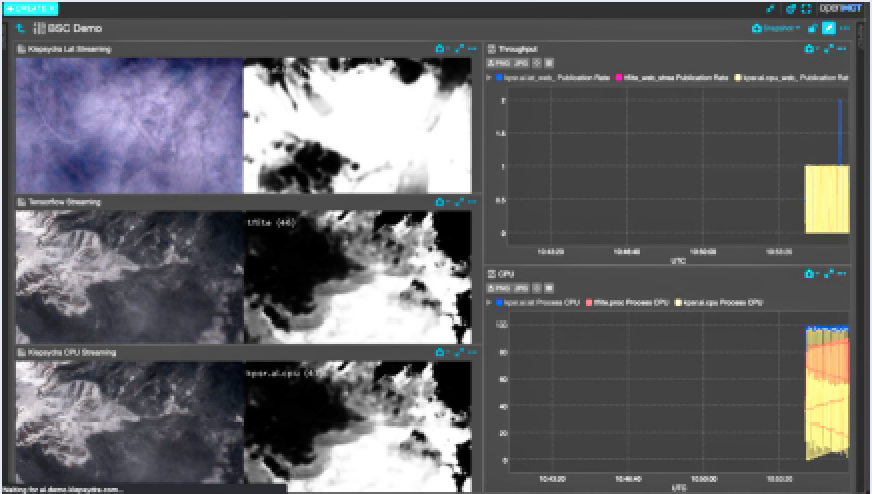

Image 3.

How To Run The Demo

Step 1

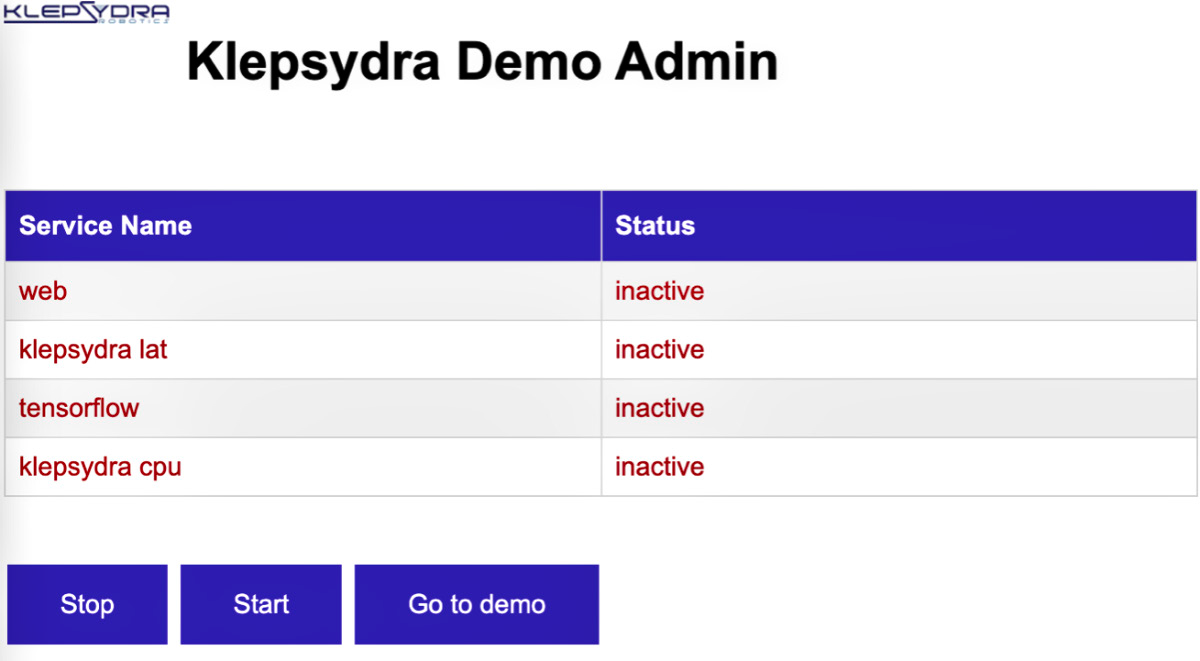

To access the demo, use one of the following links, depending on your processor type:

• Intel Processors: https://ai.demo.klepsydra.com/bsc-admin/

• ARM Processors: https://arm.demo.klepsydra.com/bsc-admin/

Once you click on the link, please wait for the table to load and display the status of the demo (active or inactive).

If the status is shown in green, the demo is running correctly. However, if the status is in red, the demo has either stopped or finished (it lasts 20 minutes).

If the demo is not active (RED), click on the start button and wait for 5 seconds for the demo to start.

Step 2

If all four boxes are green, and the text “OK” is displayed, it indicates that the demo has been started successfully. Once the demo is up and running with all the necessary processes, click on the “Go to demo” button.

That button takes you to the demo below...

Step 3

Once selected, a list of boxes will appear on the right. Click on the one named “BSC demo”.

Benefits Of Klepsydra AI

The demo showcases the Cloud Detection DNN model executed on three identical computers, each with a different optimization. The first computer runs Klepsydra optimized for latency (kpsr.lat), the second uses TensorFlow Lite, and the third uses Klepsydra optimized for CPU (kpsr.cpu).

Klepsydra AI demonstrates remarkable elasticity and high-performance capabilities. The kpsr.lat configuration can process up to twice as many images per second as TensorFlow Lite, while kpsr.cpu processes the same number of images as TensorFlow Lite but with fewer CPU resources. These improvements are evident in both the Intel and ARM versions of the demo.

In summary, Klepsydra AI provides customers with a unique capability to adapt to their specific needs, whether it be latency, CPU, RAM, or throughput. This feature makes Klepsydra AI highly suitable for onboard AI applications such as Earth Observation onboard data processing and compression, vision-based navigation for on-orbit servicing, and lunar landing.

Acknowledgments

This demo was prepared as part of ESA’s KATESU project to evaluate Klepsydra AI for Space use. For further information on this project, please access this direct link...

The OBPMark-ML DNN was provided to Klepsydra by courtesy of ESA. This algorithm is part of ESA’s OBPMark framework. For further information on this framework, please contact [email protected]

klepsydra.com

Author Isabel del Castillo has an MSc in Philosophy as well as 20 years of experience in Education and more than 10 years in management and accounting roles. In addition to financial tasks, she collaborates on business development activities and manages all non-technical tasks.